Cecilia Alvarez, EMEA Privacy Policy Director, Facebook

The digitalisation of our social exchanges, business activities and interactions with public authorities has been greatly accelerated by COVID-19 lockdowns, social distancing and mobility restrictions. We now work remotely, online; we use digital payment apps instead of cash. We are used to logging in via QR codes through our phone cameras; we send e-greetings, make short videos of our friends as birthday greetings, virtually visit museums and listen to concerts; we even attend university courses online; we register births or deaths remotely and execute legal documents with a digital signature, etc.

Digitalisation has enabled services to be tailored to an individual’s actual or perceived needs. This ranges from personalised banking to personalised medicine, personalised education, personalised shopping and personalised travel experiences. A significant number of online business models have been based solely or partially on personalised ads, either as part of the core service or as an ancillary feature of another core service. Personalised ads have also financed the provision of certain free services, including email, videoconferencing, search engines, social media, navigation or games, to name a few.

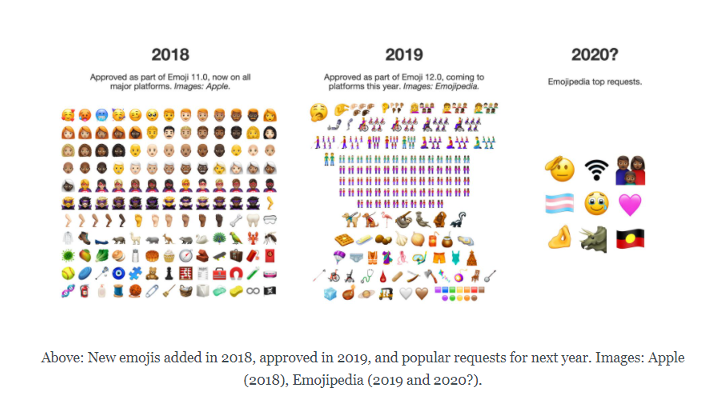

Digitalisation has created its own neologisms that correspond to popular new digital behaviours. We google someone or have an insta moment; we zoom, skype or teams instead of meeting in person; we create a spotify playlist; while some have become an influencer or a youtuber. Our language has expanded to encompass new social norms, such as sending a message in vanish mode, unfriending or accepting someone. For those overwhelmed by all of this, there is a digital detox, which has even given rise to new labour rights, such as the French or Spanish right to be digitally disconnected. Popular culture has been changed forever by emojis and GIFs, (audio)visual neologisms that are particularly powerful ways to express emotions as well as gender, age and racial inclusion.

The processing of personal data is an invisible element of the design, implementation and technical maintenance of these activities, involving multiple actors in a complex ecosystem. But rather than rely on a more sophisticated approach based on our skills in history, philosophy and maths, we are tempted to reach for simplistic solutions. To understand this further, I have focused on three particular issues: (i) the unclear role of consent, (ii) the impact of an inflexible approach to data protection, and (iii) the privatisation of justice without social consensus.

The unclear role of consent

Consent is one of several mechanisms under the EU data protection regulation, the GDPR, which legally justify the processing of personal data. Under the GDPR, consent is not considered more important than other legal mechanisms. However, we have created complex legislation that nobody fully understands, since apparently only ‘data protection experts’ can grasp what it means to rely on any legal basis other than consent. Reading a privacy policy and understanding whether contractual necessity, vital interests, legitimate interests or public interests might apply requires users to have both the legal acumen and the determination to do so. Further, the GDPR promise that individuals are empowered to control their data creates false expectations and distrust of organisations that rely on any legal basis other than consent, which is the only one users seem to actually understand.

Further, some data protection authorities in some EU Member States will consider only consent, yet also take the view that no consent could actually be valid because there is an asymmetry of power between the service provider and consumer. This would mean that the data protection regulator arrogates to itself the right to create a “data protection consent” not governed by civil law (which is not appropriate in my opinion). Asymmetry of power obviously exists by definition in any B2C relationship or any employer-employee relationship, but the rules governing consumer terms and conditions or labour regulations acknowledge the existence of valid consent in these kinds of relationship.

Other EU regulations also pivot around consent and have included very limited exceptions, which are proving to be insufficient in the digital age. After the terror attacks of September 11, 2001 and years of complex debate on the need, guarantees and costs of retaining personal data on location and traffic, we ended up with the 2006 Data Retention Directive. This was however invalidated by the Court of Justice of the EU in 2014 for lack of proportionality.1 Debate continues on the need to balance privacy of communications with fighting serious crime online, such as child exploitation; even celebrities such as Ashton Kutcher are taking part.2 It is obvious that consent should not play a role in these debates, since the fight against crime cannot be left to the bad actors’ will and decision.

As opposed to data protection regulations, privacy regulations primarily focus on consent regarding cookies, which explains why we have omnipresent cookie banners. In this case, the legislative focus on consent has proven not to be the most appropriate action to provide effective privacy protection. Data protection authorities identified as early as 2012 cases where cookie consent was not required because the privacy invasion was insignificant, such as first party analytics.3 These cases will be considered as part of the ongoing process to update EU e-privacy laws. The industry has identified other situations where they believe the focus should be put on safeguards to ensure minimum privacy impact, rather than on insisting on consent.

There are other examples, such as scientific research, which has gained prominence during the COVID-19 pandemic. The GDPR includes a certain number of conditions to enable scientific research that do not include consent, such as providing an exemption to the general prohibition on use of health data, focusing instead on safeguards, including the codification of data. This is the usual way data are protected in clinical trials in the EU, US and Japan. However, some legislators and data protection authorities have nonetheless continued to insist on very narrow consent mechanisms that are not viable to build and implement a national/EU research strategy or to provide legal certainty to investors in pan-European research projects.

Some of these issues are summarised in an excellent CIPL article from 2015 that is still relevant today:4

“(…) We do not believe that consent is the best or only way to empower individuals in this day and age for three reasons. First, consent has become overused and an over-relied-upon in practice, calling into question its function as indicator of meaningful individual choice and control. Privacy policies and notices are too numerous, long and complex to result in valid consent. (…) Second, modern information practices are on a collision course with canonical consent requirements as envisaged in many data privacy laws today. Increasingly, there are situations where consent will simply not work (…) Third, and perhaps most importantly, are other mechanisms in our ever-growing privacy toolkit and existing legal regimes that, in the appropriate contexts, are able to deliver privacy protection and meaningful control more effectively than consent. However, while these alternative mechanisms already exist, they must be better understood, further developed and more broadly accepted.”

Finally, it is worth noting that we are witnessing a convergence of data protection, competition and consumer protection approaches, where individual consent seems to be a common denominator. However, we need to be very careful before borrowing concepts from other regulations, in particular regarding consent, since its construction and the role it plays are entirely different in each regulation.

The impact of an inflexible approach

When I began to study data protection in 1997, I realised that personal data has a dual nature: the moral dimension of any fundamental right, linked to the protection of human dignity, and the commercial aspect linked to economic outcomes. Both aspects must be adapted to the evolution of our culture and social development.

In the EU, fundamental rights and freedoms (including the right to privacy, freedom of information and expression or the freedom to establish a business) are protected by general principles, and infringement must be proved by the affected party through civil and/or criminal court proceedings. Data protection is (as far as I know) the only fundamental right or freedom that the EU and its Member States protect through administrative laws, with specific administrative bodies (i.e., data protection supervisory authorities) enforcing its protection. On the plus side, this has raised public awareness and created concrete tools to protect human dignity and to prevent horrific abuses of personal data, such as those we witness(ed) under totalitarian regimes. On the negative side, this has led to isolation of data protection rights from the rest of the law. The result has been an over-emphasis on fundamental rights to justify extreme measures, with negative effects on other fundamental rights and freedoms and on the commercial dimension of the data protection right. The rise of populism is impacting the way we envisage and apply our legal instruments. It is increasingly difficult to have a discussion with those who hold different views, while some feel the need to adopt an inflexible, extreme approach to data protection, rather than one which is more proportionate and nuanced.

In my professional experience, data protection issues cannot be assessed in isolation – they must be balanced with other laws and interests and in a context that is full of grey areas. These include: contractual laws that determine whether a contract is valid; consumer laws that determine whether consumer terms and conditions are legal; labour laws that set the framework for the rights and duties of employer and employees and the role of trade unions; clinical trial regulations that determine ethical principles for biomedical research and the roles of the different actors in a clinical trial, etc.

Other legitimate interests must also be taken into account. For instance, there is always a balance that needs to be struck in a democratic society between the need for security and safety (e.g., to combat money laundering, terror financing and other serious crimes) and the respect for privacy and other fundamental rights. Data protection requirements must be viable and operational and be assessed considering their social, technical and economic impact. For instance, the link between international data flows and trade and economic and social progress is undeniable. As a European, I am proud that we have built, and exported beyond our borders, a concept of dignity linked to the rule of law in democratic societies. We cannot build and radiate our influence by isolating ourselves.

Privatisation of justice without social consensus

The personalisation trend means that the user is central and no longer a passive recipient of a service. Users actively demand to be listened to by service providers and the authorities. Users have direct communication channels such as personal blogs, social media or other online means to express themselves without intermediaries or editorial filters. The rating by consumers of the quality of any service is now considered of equal or greater importance than any communication from the service provider. The democratisation of opinion on any topic has fundamentally changed the character of social communication (previously it was limited to broadcast media – newspaper, TV and radio), which has led to a massive increase in conflict arising from user generated content.

Instead of being assessed in courts by experienced magistrates with the guarantee of a judicial due process, some of these conflicts are now being overseen by administrative bodies without such guarantees. Even more concerning is the legislative trend towards the privatisation of justice, with an expectation that some of these disputes will be dealt with by private companies. An interesting example of this is how the ‘right to be forgotten’ proposal was referred for resolution by private companies, who were obliged to automate internal procedures and policies as much as possible to deal with vastly differing and complex requests on the basis of only broad criteria arising from the Costeja CJEU case.5

Legislators and regulators are not providing the advice and frameworks necessary to deal with these disputes. Despite this, organisations that have created and enforced their own rules for a good online citizen have been criticised by users or regulators.

User empowerment has generally not been accompanied by any consideration of the user’s responsibilities. Some parents use online services as a babysitter, without supervision of their children’s online activities. Even worse, some parents or educators allow children under their custody/supervision to use services not designed for their age, without thought to liability for any harm. As a society, we have not yet worked out how to create digitally responsible and resilient individuals. Nor have we decided what role each stakeholder should play (service providers, users and public authorities) in setting rules to prevent online harms such as digital violence, bullying, hate speech, sexual blackmail, etc. It is encouraging to see that some Data Protection Authorities are considering holding users responsible for the use of third parties’ data, when their actions harm others.

Conclusion

The digitalisation of our social and professional interactions has been accompanied by rapid change in our approach to data protection and privacy. The ‘individual-centric’ trend has enabled personalised services which we can no longer imagine living without. It has also encouraged an egocentric approach that has blurred our view of individual responsibility to our fellow human beings, both the current and future generations and the environment. We cannot just blame others for negative consequences but must embrace our collective responsibility for our own contribution to the problem. We need to work together to identify the potential harms in our digital society and economy, who is responsible for them and which part we should each play in mitigating or avoiding them.

There is a role for consent in redefining our social norms online, as a way to avoid the trap of focussing on personal data protection without considering other laws and the full social and economic impact. We are, after all, still only human beings, with all our problems. Technology can and will provide ways to address these problems; choosing the right way forward depends on our learning the lessons of history, protecting our dignity and the rule of law, but not being afraid to be responsibly creative and innovative.

The opinions expressed are purely personal and do not necessarily correspond to the corporate views of any organisation, past or present, for which the author has worked.

1 https://curia.europa.eu/jcms/upload/docs/application/pdf/2014-04/cp140054en.pdf

2 https://www.politico.eu/article/ashton-kutcher-urges-eu-to-pass-interim-privacy-law/

3 https://ec.europa.eu/justice/article-29/documentation/opinion-recommendation/files/2012/wp194_en.pdf

4 Centre for Information Policy Leadership (CIPL) Empowering Individuals Beyond Consent (informationpolicycentre.com)

Cecilia Álvarez Rigaudias has been the EMEA Privacy Policy Director at Facebook since March 2019. From 2015 to 2019, she served as European Privacy Officer Lead of Pfizer, Vice-Chair of the EFPIA Data Protection Group and Chairwoman of IPPC-Europe. For an interim period, she was also the Legal Lead of the Spanish Pfizer subsidiaries. Before that, she worked for 18 years in a major Spanish law firm, leading the data protection, IT and ecommerce areas of practice as well as the LATAM Data Protection Working Group. Cecilia was the Chairwoman of APEP (Spanish Privacy Professional Association) until June 2020 and is currently in charge of its international affairs. She is also the Spanish member of CEDPO (Confederation of European Data Protection Organisations) and a member of the Leadership Council of The Sedona Conference (W-6). She is a member of the Spanish Royal Academy of Jurisprudence and Legislation in the section of Law on ICT as well as Arbitrator of the European Association of Arbitration (ICT section).
